Listening To The Algorithm

Thoughts on a new feature at NotebookLM that turns your sources into an extended audio conversation.

One of the very first demos I saw when I began working with Google in late July of 2022 was a series of prompts that asked their PaLM model to discuss black holes at various levels of explanation: Explain it to me like I'm ten, like I'm a grad student, using sports metaphors. At that point PaLM was almost certainly one of the two most powerful language models on the planet, though Google had never exposed it directly to users. So just getting my hands on it was interesting enough. The explanations it served up were captivating, if a little simplistic by today's standards. But what most caught my eye was how much command the model had over the different forms of explanation. It seemed a little uncanny that you could write just a few words of instructions in English prose ("explain it to me at a high school level using chess analogies") and the model would so readily shift from one explanatory mode to another, keeping the facts more or less intact in the move.

It seemed like a remarkably high-order skill for a technology that had well-documented problems with things like basic math and hallucinations. Over time I came to realize that it was just a slightly more sophisticated version of something that neural nets had been good at for more than five years: translation. Take this input and turn it into this new output, but keep the meaning intact. We'd first seen the power of this with the launch of Google Translate, taking English text and magically turning it into Spanish, arguably the first breakthrough in deep learning that was widely adopted by consumers. But by the time I arrived in 2022, the models had graduated up to translating astrophysics into elementary school-friendly metaphors.

The ability to translate or summarize is one of those edge-case capabilities that can confuse things more than clarify in the context of debates about "artificial general intelligence." Until a few years ago, humans were the only entities on the planet that could translate information from one format to another in this way. Now a computer can do it, faster and more reliably than any human on the planet. Thanks to the underlying Gemini model, NotebookLM can effortlessly shift back and forth between almost forty different languages; you can read a document in Japanese and ask questions about it in Spanish and it won't miss a beat. That is a giant step forward.

But summarizing and translating are not synonymous with thinking. On the most basic level, translation tasks do not involve generating original ideas. If your translator keeps inserting his own theories while translating your work into another language, that's a bug, not a feature. Translation and summarization are maybe the most striking examples of AI's uneven development: the models are great at some things that have traditionally been exclusively the province of human intelligence, and surprisingly lame at tasks that a $50 Radio Shack calculator could have done in the 80s.

In part because those early “explain it to me like I’m five” experiences left such an impression on me, and in part because it’s generally just a good strategy to lean into what the models do well, many of the early NotebookLM features (back when it was an internal prototype code-named Tailwind) were variations on this theme. We had a feature we called “Explanatory metaphor” that would take any text you gave it and generate a helpful metaphor to describe the core ideas in the passage. We did a number of experiments with different summarization formats; back then, there was still something magical in giving the model a few complicated paragraphs and watching it turn them into reader-friendly bullet points.

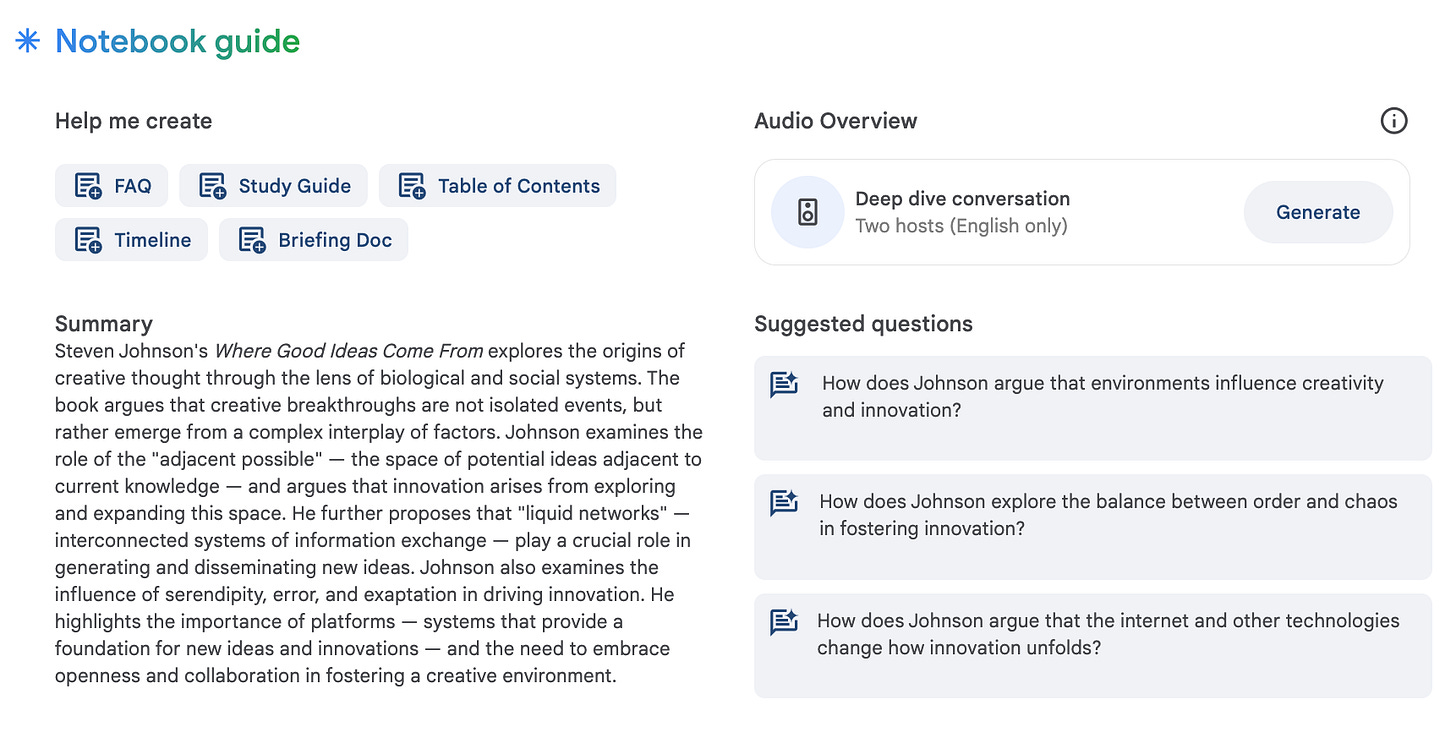

Over time, the possibilities of translating information from one format to another expanded, and it became possible to work with much larger documents or collections of documents. Because NotebookLM is fundamentally a tool to help you understand things, we’ve started to put more and more emphasis on allowing our users to convert whatever information they’re working with into the structures that work best with their way of thinking or learning. That was the underlying principle behind the suite of tools we introduced in June with Notebook Guide, which lets you convert your sources into formats like study guides or FAQs with a single click.

All those formats had one thing in common: they were all text-based. But not everybody learns or remembers most effectively through reading. Many of us are auditory learners, or just prefer to take in new information while walking around or driving, when reading is impossible. And we know from the massive rise in podcast listening that one of the most powerful ways to understand a topic is to listen to two engaged, thoughtful people having a conversation about it.

But what if it doesn’t have to be actual people having the conversation?

On Wednesday, the NotebookLM team rolled out a new feature called Audio Overviews, a new addition to Notebook Guide that takes your collected sources and uses them to generate a roughly ten-minute “deep dive” audio conversation between two AI hosts. Here’s what I got when I created an audio overview based on the full text of The Infernal Machine, my latest book:

Pretty wild, right? In the last 48 hours, we’ve seen an amazing amount of interest in this feature, with people doing everything from generating overviews based on their CVs (apparently very good for your self-esteem), to uploading recent corporate documents to create a company-wide “Week in Review” episode to share with colleagues, to uploading the draft of a fantasy novel manuscript to hear which storylines were most conversation-worthy. Google’s Jeff Dean has a fun Twitter thread where he shared a bunch of examples of what people are doing with the tool.

Audio overviews take up to five minutes to generate. There are a number of edit cycles happening behind the scenes to ensure the content is faithful to the source material, engagingly described, and delivered with convincing human intonation. (No one wants to listen to two Siris in conversation.) In that discussion of The Infernal Machine, the AI hosts riff on the major themes and introduce some of the most interesting characters from what is a fairly complicated book. They do mispronounce Alphonse Bertillon's name, and they describe the Ludlow massacre as happening in 1913, not 1914, though the labor dispute that led to it did in fact begin in 1913. But overall, it's a remarkably lucid and thorough summary of a 300-page book, entirely generated by software.

One thing I’ve noticed listening to dozens and dozens of these is that while the conversation has a playful tone throughout—there’s a lot of bantering and an arguably excessive fondness for puns—I’ve never actually heard either of the hosts say something genuinely funny. I imagine over time we will probably allow you to dial the tone of the discussion to fit your own tastes. (There's another great conversational audio experiment at Google called Illuminate, which is currently more focused on scholarly texts and generally has a more serious tone.) But I’m not totally convinced that the models are capable of true conversational humor yet. This may just be another place where artificial intelligence is developing unevenly, and if it is, I suspect it’s because humor is in many ways the opposite of translation and summarization; humor is all about surprise, about defying expectations, going off script in just the right way.

In two years, the models have gone from producing a few sentences of helpful astrophysics metaphors to generating a convincing ten-minute audio conversation based on an entire book. That is astonishing progress by any measure. But they still don’t know how to make us laugh.

I ran the audio feature on a short essay I'd written, and it's astonishing to hear my thoughts being expressed by a "conversation." While certainly amusing, I'm not sure yet how to make the audio feature actually useful to my writing process. I'd be glad to hear how others are using it to their advantage.

Yesterday I made a prototype vlog audio track drawn from a perplexity.ai session, downloaded the audio file, and layered it in CapCut underneath matching stock footage. NotebookLM did a great job synthesizing the uploaded source content, and the voices were credible and listenable. I would love to see NotebookLM add the ability to edit a transcript of the generated podcast talk track to clean up occasional word glitches, and even to regenerate small sections of dialogue while keeping the remaining conversation intact. This would be similar to the text-to-audio repair features in Descript.