Revenge Of The Humanities

Like any technological revolution, AI is putting a premium on a new set of skills. Only this time, the skills might be best acquired in a writing workshop or a philosophy seminar.

[Quick news flash before we get to our main programming: as of yesterday, NotebookLM is available in over 200 countries and territories in the world. Read our announcement here.]

Last week I had the honor of delivering the commencement address at my youngest son's high school graduation, which was, as you might imagine, a bittersweet occasion. In the commencement talk, one of the ideas I touched on briefly was something I've mentioned before here at Adjacent Possible: the somewhat paradoxical idea that, thanks to the AI revolution, we are entering a period where it will be a great time to be a humanities major with an interest in technology. The conventional story of course is that we're in the middle of a mass exodus from English or History as majors—sometimes blamed on the excesses of cultural theory, sometimes on the fact that all the money is in Computer Science and Engineering right now. And to be sure, the exodus is real. But there is a case to be made that college and grad students are over-indexing on the math and the programming, just as the technology is starting to demand a different set of skills.

The simple fact of the matter is that interacting with the most significant technology of our time, language models like GPT-4 and Gemini, is far closer to interacting with a human than to the way we have historically interacted with machines. If you want the model to do something, you just tell it what you want it to do, in clear, persuasive prose. People who have command of clear and persuasive prose have a competitive advantage right now in the tech sector, or really in any sector that is starting to embrace AI. Communication skills have always been an asset, of course, but thanks to language models they are now a technical asset, like knowing C++ or understanding how to maintain a rack of servers.

This is, of course, a variation on Andrej Karpathy's quip from more than a year ago: "The hottest new programming language is English." But it's more than that, I think. The core skills are not just about straight prompt engineering; they're not just about figuring out the most efficient wording to get the model to do what you want. They also draw on deeper, more nuanced questions. What is the most responsible behavior to cultivate in the model, and how do we best deploy this technology in the real world to maximize its positive impact? What new forms of intelligence or creativity can we detect in these strange entities? How do we endow them with a moral compass, or steer them away from bias and inaccurate stereotypes? Can language alone generate a robust theory of how the world works, or do you need more explicit rules or additional sensory information?

All of those questions have been absolutely central to the discussion of AI for the past two years, but if you think about it, they were all questions that belonged to the humanities until the language models came along: ethics, philosophy of language, political theory, history of innovation, and so on.

I don't want to carry this argument too far. Some of my training as a writer has come in useful in creating NotebookLM, through the design of our core prompts and the overall “voice” of the product. But Notebook itself would not exist without the exceptional engineering talent of our extended team, from our front-end and back-end programmers to the people who built the enormously complex infrastructure of the models themselves. Perhaps someday it will be possible for a code-illiterate person like myself to conjure an entire application into being just by describing the feature set to a language model, but we are not there yet. And of course building the models themselves will almost certainly continue to require skills that are best honed in engineering and computer science programs, not writing seminars.

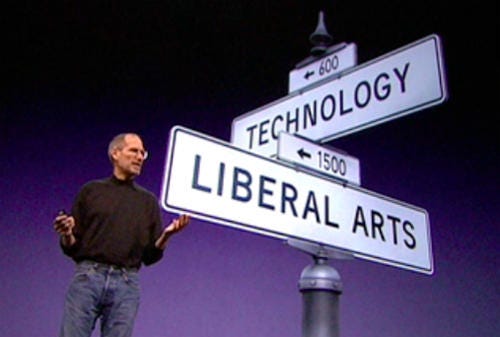

But I do think it is undeniable that the rise of AI has ushered humanities-based skills into the very center of the tech world right now. In his last product introduction before his death, Steve Jobs talked about Apple residing at the intersection of the liberal arts and technology; he literally showed an image of street signs marking that crossroads. But the truth is that back then, most of the travelers on the liberal arts avenue were designers. There wasn't as much need for philosophers or ethicists or even writers in building the advanced consumer technology of that era. Now those skills have a new relevance.

There's a wonderful illustration of the kinds of skills that are now at a premium in the conversation I had a few days ago with Dan Shipper for his AI & I podcast (best viewed on video so you can see what's happening on screen). Dan had me on the show (formerly called How Do You Use ChatGPT?) to walk through some of the new features that we just launched yesterday, in addition to our international rollout, and to generally just get a sense for how I have integrated NotebookLM into my creative workflow. He likes to use each episode to try to create something spontaneously with the guest; it's very live and unscripted, which is always a little nerve-wracking when you are working with new features (not to mention stochastic language models). But in this case it made for a beautiful collaboration.

The sample use case I brought to the show was a notebook that I had filled largely with interview transcripts from the NASA oral history project. The notebook has something like 300,000 words of interviews with astronauts, flight directors, and other folks from the Apollo and Gemini programs. (That may sound like a lot, but you can now have up to 25 million words' worth of sources in a single notebook.) I also had a few slide decks in there with images, since NotebookLM now supports Slides and has true image/chart/diagram understanding as well. We also, as you will see, ended up diving into my collection of reading quotes, which I maintain in a separate notebook. Dan and I decided that we would try to use this notebook to gather ideas for a potential documentary project about the Apollo 1 fire that tragically killed three astronauts in early 1967. I recommend watching the video starting around the thirty-minute mark, where we really dive into the exercise. I think it's probably the best example to date of the kind of high-level creative and conceptual work that NotebookLM makes possible, where the software is truly helping you make new connections and synthesize information far more easily than would have been possible before.

But the thing I also want to draw your attention to is how much Dan is driving the process, by suggesting a series of prompts that ultimately elicit some astonishing—even to me—results from NotebookLM. I was going into it more or less planning on showcasing NotebookLM's ability to extract and organize a complex string of facts out of a disorganized collection of source material, like creating a timeline of all the events associated with the fire, or suggesting key passages that I could read to understand the impact of the fire. (All with our new inline citations, which are pretty amazing in their own right.) But at a certain point, Dan really takes the wheel, and says, effectively: "This is a Steven Johnson project, and so it's got to have some surprising scientific or technological connection that the reader/viewer wouldn't expect; let's ask NotebookLM to help us find that angle." And then we just go on a run—again, largely driven by Dan's prompting—that takes us to some pretty amazing places, and even generates the opening lines of a script by the end of it.

What you can see in this sequence are two things: 1) a remarkably capable language model doing things with a large corpus of source material that would have been unthinkable just a year ago really—but just as importantly 2) a very smart human being who knows how to probe the source information and unlock the skills of the language model to generate the most useful and interesting results. The skill that Dan displays here is basically all about being able to think through this problem: Given this body of knowledge, given the abilities and limitations of the AI, and given my goals, what is the most effective question or instruction that I can propose right now? I don't know whether you're better off with a humanities background or an engineering background in developing that talent, but I do believe it has become an enormously valuable talent to have.

The other thing worth noting in the exchange—and I take a step back to reflect on it in the middle of the exercise—is the range of intelligences involved in the project. On the one hand you have the intelligence of all the astronauts and flight directors contained in the interview transcripts themselves; you have the intelligence of all the authors whose quotes I have gathered over the past two decades of research and reading; you have the intelligence of two humans who are asking questions and steering the model's attention towards different collections of sources, crafting prompts to generate the most compelling insights; and then you have the model itself, with its own alien intelligence able somehow to take our instructions and extract just the right information (and explain its reasoning) out of millions of words of text. I used to describe my early collaborations with semantic software as being like a duet between human and machine. But these kinds of intellectual adventures feel like a chorus.

This idea of the model not as a replacement for human intelligence, but instead as a tool for synthesizing or connecting human intelligence, seems to be gathering steam right now, which is good to see. The artist Holly Herndon made a persuasive case for calling artificial intelligence "collective intelligence" in a recent conversation with Ezra Klein. My friend Alison Gopnik has been talking about AI for a long while as a "cultural technology," which adds weight to the prediction that humanities skills will have increasing relevance in a world shaped by such technologies. In a recent conversation with Melanie Mitchell in the LA Review of Books, Alison argued:

A very common trope is to treat LLMs as if they were intelligent agents going out in the world and doing things. That’s just a category mistake. A much better way of thinking about them is as a technology that allows humans to access information from many other humans and use that information to make decisions. We have been doing this for as long as we’ve been human. Language itself you could think of as a means that allows this. So are writing and the internet. These are all ways that we get information from other people. Similarly, LLMs give us a very effective way of accessing information from other humans. Rather than go out, explore the world, and draw conclusions, as humans do, LLMs statistically summarize the information humans put onto the web.

It’s important to note that these cultural technologies have shaped and changed the way our society works. This isn’t a debunking along the lines of “AI doesn’t really matter.” In many ways, having a new cultural technology like print has had a much greater impact than having a new agent, like a new person, in the world.

Another way to put that—which I will probably adapt into a longer piece one of these days—is that language models are not intelligent in the ways that even small children are intelligent, but they are already superhuman at tasks like summarization, translation (both linguistic and conceptual), and association. And when you apply those skills to artfully curated source material written by equally, but differently, gifted humans, magic can happen.

PS: As a little bonus treat, here’s a glimpse of our new inline citations (with image/chart understanding) live in my NASA notebook. You can see how NotebookLM answers a question about NASA budgets by drawing information from a chart included in a slide deck, and actually cites the chart itself in the citation.

And here’s a very sophisticated French notebook, part of our expansion to more than 200 countries and territories around the world:
